Have you ever wondered why forecasters do not always agree? This is because they use different models, which do not necessarily account for the same things. The big question is: which models actually provide the most accurate forecasts? The M4 competition seeks to answer this by asking entrants to forecast 100,000 time series, with data frequencies ranging from hourly to annual and forecast horizons ranging from 48 hours to 6 years.

Jennifer Castle, Jurgen Doornik and David Hendry entered the competition to test how their methods compared. Of the 248 teams that registered to participate, only 50 managed to complete all the forecasts. Among those entrants, they ranked 9th overall on point-forecast accuracy and 3rd on interval forecasts, where the right level of uncertainty has to be accounted for. They also achieved the best accuracy of all entrants for hourly series. To produce their forecasts, they developed two fast and robust methods using the statistical software Ox and OxMetrics: Delta, which dampens the sample average growth rates, and Rho, which estimates an adaptive autoregressive model. They then averaged the two and calibrated the result, producing the forecasts they called Card.
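To give a feel for what "dampening the sample average growth rate" means, here is a minimal sketch in Python. It is not the Delta method itself: the damping factor of 0.9, the use of log growth, and the function name `damped_growth_forecast` are all illustrative assumptions, and the published method includes its own estimation and calibration steps that are not reproduced here.

```python
import math


def damped_growth_forecast(y, horizon, damping=0.9):
    """Illustrative damped-growth forecast (hypothetical, not the Delta method).

    Computes the sample average growth rate of log(y), then extrapolates it
    with growth shrinking geometrically: step h adds damping**h * g.
    The default damping=0.9 is an arbitrary assumption.
    """
    if len(y) < 2 or any(v <= 0 for v in y):
        raise ValueError("need at least two strictly positive observations")
    if horizon < 1:
        raise ValueError("horizon must be at least 1")
    logs = [math.log(v) for v in y]
    # Sample average one-step growth in logs: (last - first) / (n - 1).
    g = (logs[-1] - logs[0]) / (len(logs) - 1)
    level, step, forecasts = logs[-1], g, []
    for _ in range(horizon):
        step *= damping  # each step's growth is a fraction of the previous one
        level += step
        forecasts.append(math.exp(level))  # back-transform to original units
    return forecasts


if __name__ == "__main__":
    series = [100, 104, 109, 113, 118]
    print(damped_growth_forecast(series, horizon=6))
```

With `damping=1.0` the sketch reduces to extrapolating the average growth rate unchanged; values below 1 pull long-horizon forecasts towards a flat path, which is one common way to guard against over-extrapolating a trend.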

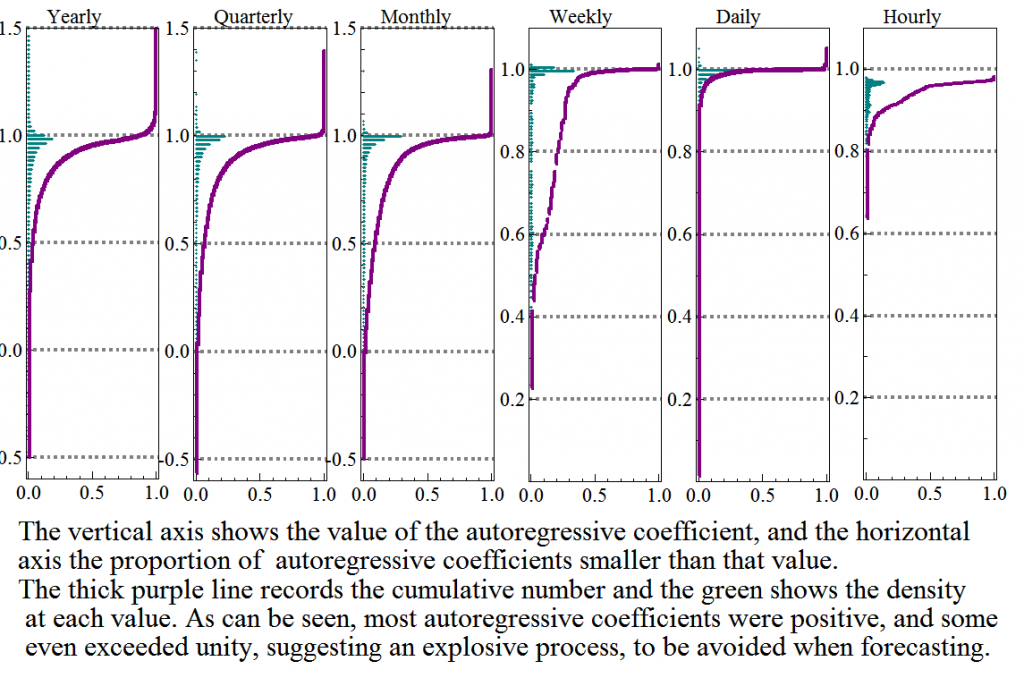

The figure shows the distribution of the first-order autoregressive coefficients, estimated on the logs of each of the 100,000 time series, organised by data frequency.
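The post does not say exactly how these coefficients were estimated, so the sketch below assumes a simple approach: an ordinary least squares regression of the log series on its own first lag, with an intercept. The function name `ar1_coefficient` is hypothetical. A coefficient close to 1 indicates a highly persistent, trending series, while smaller values indicate that the series reverts more quickly towards its mean.

```python
import math


def ar1_coefficient(y):
    """OLS estimate of rho in log(y_t) = c + rho * log(y_{t-1}) + e_t.

    Assumed estimator for illustration; not necessarily how the figure's
    coefficients were computed.
    """
    if len(y) < 3 or any(v <= 0 for v in y):
        raise ValueError("need at least three strictly positive observations")
    x = [math.log(v) for v in y]
    lagged, current = x[:-1], x[1:]
    n = len(current)
    mean_lag = sum(lagged) / n
    mean_cur = sum(current) / n
    # Slope of the regression line: covariance / variance of the regressor.
    sxx = sum((a - mean_lag) ** 2 for a in lagged)
    if sxx == 0:
        raise ValueError("lagged series is constant; coefficient undefined")
    sxy = sum((a - mean_lag) * (b - mean_cur) for a, b in zip(lagged, current))
    return sxy / sxx


if __name__ == "__main__":
    exponential_growth = [2 ** t for t in range(10)]
    print(ar1_coefficient(exponential_growth))  # close to 1: very persistent
```

Applying a function like this to every series and grouping the results by frequency would give the kind of distribution the figure summarises.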

In their article "Card forecasts for M4", published in the International Journal of Forecasting, they describe the Card method in detail. Card forecasts provide a useful univariate benchmark against which to evaluate any time series forecasts, with the aim of improving future forecasts and forecast intervals.

Jennifer Castle, Tutorial Fellow in Economics, Magdalen College, University of Oxford

Jurgen Doornik, Research Fellow, Climate Econometrics, Nuffield College

David Hendry, Director of Climate Econometrics, Nuffield College